In a significant development for artificial intelligence (AI) technology used in security, the US-based weapons scanning company Evolv Technology has come under scrutiny for misleading advertising. A series of investigations has revealed that the company’s AI scanners, installed at the entrances of numerous schools, hospitals, and stadiums across the United States, do not have the capabilities the company claimed. A proposed settlement with the US government aims to prevent Evolv from making unsupported claims about its products in the future.

Evolv Technology initially marketed its AI scanners as capable of detecting all sorts of weapons, a claim later debunked by BBC investigations. The Federal Trade Commission (FTC) has since stated that the company must stop making such claims, a warning to other AI firms that they need to substantiate what they say about their technology. Evolv says it has reached an agreement with the FTC but does not admit to any wrongdoing.

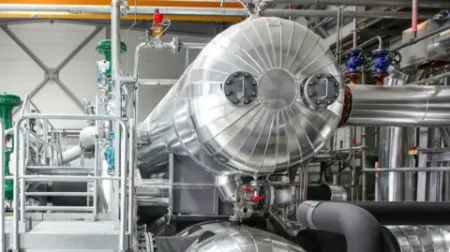

Samuel Levine, director of the FTC’s Bureau of Consumer Protection, emphasized the importance of backing up claims about technological advances. This is particularly relevant given the growing reliance on AI in various sectors, including security. Evolv Technology’s mission is to replace traditional metal detectors with its AI weapons scanners, which are pitched as capable of swiftly and accurately identifying concealed weapons such as bombs, knives, and firearms.

Despite these promises, the FTC’s complaint argues that Evolv’s marketing was deceptive in asserting that its scanners could find “all weapons.” Previous reports had also highlighted that the company’s scanners did not reliably detect firearms and explosives. Notably, testing undertaken in 2022, which came to light through a freedom of information request, revealed inconsistencies in the effectiveness of Evolv’s technology.

Skepticism about Evolv Technology has been amplified by security failures. A recent stabbing in a New York school where Evolv’s scanners were deployed brought the system’s reliability into sharp focus, with a school superintendent asserting that the scanners “truly do not find knives.” Concerns intensified further with revelations that Evolv’s claims that the UK government had endorsed its technology were also unfounded.

Under the terms of the FTC’s proposed settlement, Evolv will be barred from making unsubstantiated claims about the detection capabilities of its products. The settlement also gives certain school clients the option to terminate their contracts. It must receive judicial approval before it takes effect.

Evolv’s interim President and CEO, Mike Ellenbogen, said the company had worked with the FTC throughout the inquiry. He said the investigation focused on past marketing practices rather than the effectiveness of the technology itself. Ellenbogen asserted that the FTC had not challenged the core functionality of Evolv’s security solutions, and he underscored the importance of accurate marketing in the fast-evolving AI industry.

The situation has raised broader questions about the veracity of claims made by AI firms. Regulators in both the US and the UK are increasingly vigilant about companies overstating the capabilities of AI, which often leaves consumers unsure what such technologies can actually do. In response to the growing concern, the FTC has launched “Operation AI Comply,” a campaign aimed at scrutinizing and addressing false claims about AI technologies.

The Evolv Technology case highlights the urgent need for accountability and transparency in the marketing of AI products. As the technology becomes more embedded in public safety and security, misleading claims could leave systems vulnerable and undermine public confidence in emerging technologies. Moving forward, regulators and companies will need to work together to foster an environment that prioritizes truthful representation and customer trust in AI.